GitHub Actions vs GitLab CI/CD: How to Build CI/CD Pipelines in Each

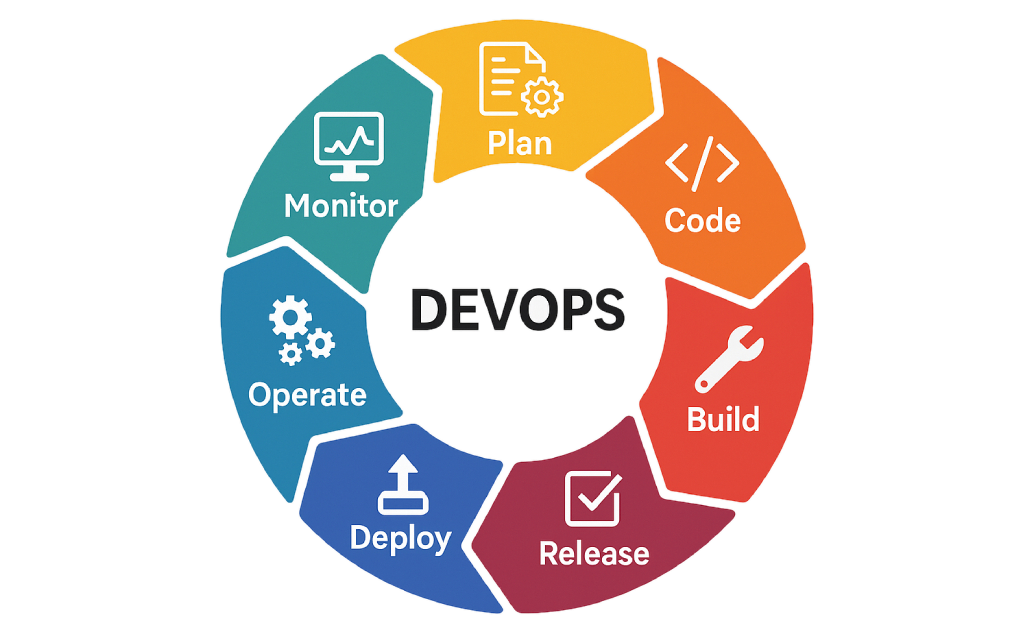

Modern software development thrives on automation, and CI/CD pipelines are the engine behind fast, reliable, and frequent software delivery.

If you’re hosting your code on GitHub or GitLab, you already have access to powerful native CI/CD tools:

- GitHub Actions (built into GitHub)
- GitLab CI/CD (built into GitLab)

But how do they compare? And how do you build a CI/CD pipeline in each? Let’s break it down.

What is CI/CD (Quick Recap)?

- CI (Continuous Integration): Automatically builds and tests code when you push changes.
- CD (Continuous Delivery/Deployment): Automatically prepares and/or deploys your code to staging or production.

CI/CD pipelines:

- Reduce human error
- Speed up delivery
- Improve software quality

Both GitHub Actions and GitLab CI/CD are automation engines that make this possible, directly in your code hosting platform.

GitHub Actions vs GitLab CI/CD (Quick Comparison)

| Feature | GitHub Actions | GitLab CI/CD |
| --- | --- | --- |
| Integration | Native to GitHub | Native to GitLab |
| Pipeline File Name | .github/workflows/*.yml | .gitlab-ci.yml |
| Free Tier | 2,000 minutes/month (private repos) | 400 minutes/month (free plan) |
| Runners (Agents) | GitHub-hosted or self-hosted | GitLab-hosted or self-hosted |
| UI Experience | Modern and integrated | Powerful and robust |
| Flexibility | High, with matrix builds | High, supports advanced DAGs |
| Best For | GitHub-based projects | GitLab-based codebases |

How CI/CD Works in GitHub (GitHub Actions)

Step-by-Step Setup

1. Create Workflow Folder

Inside your GitHub repo, create: .github/workflows/

2. Add a Workflow File

<pre><code># File: .github/workflows/ci.yml
name: CI Pipeline

# Run on pushes to main and on pull requests targeting main
on:
  push:
    branches: [ main ]
  pull_request:
    branches: [ main ]

jobs:
  build:
    runs-on: ubuntu-latest

    steps:
      # Check out the repository so later steps can see the code
      - uses: actions/checkout@v3

      - name: Install dependencies
        run: npm install

      - name: Run tests
        run: npm test</code></pre>

3. Push Code

Every time you push to main or open a pull request against main, the pipeline will run and appear in the Actions tab of your GitHub repo.
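As a possible next step for the workflow above, the sketch below adds a matrix build (mentioned in the comparison table), dependency caching, and a repository secret. The Node.js versions, the v4 action tags, and the API_KEY secret name are assumptions for illustration, and the npm cache option expects a package-lock.json in the repo. Treat it as a starting point rather than a definitive setup.

```yaml
# File: .github/workflows/ci.yml (sketch; versions and secret name are assumptions)
name: CI Pipeline

on:
  push:
    branches: [ main ]
  pull_request:
    branches: [ main ]

jobs:
  test:
    runs-on: ubuntu-latest

    # Matrix build: one job per listed Node.js version
    strategy:
      matrix:
        node-version: [18, 20]

    steps:
      # v4 assumed here; the workflow above pins v3
      - uses: actions/checkout@v4

      # Installs the requested Node.js version and caches npm downloads.
      # The cache option needs a lockfile (package-lock.json) in the repo.
      - uses: actions/setup-node@v4
        with:
          node-version: ${{ matrix.node-version }}
          cache: npm

      - name: Install dependencies
        run: npm ci

      - name: Run tests
        run: npm test
        env:
          # Hypothetical secret, defined under Settings > Secrets and variables > Actions
          API_KEY: ${{ secrets.API_KEY }}
```

With this matrix, each push or pull request starts two parallel jobs, one per Node.js version.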
How CI/CD Works in GitLab (GitLab CI/CD)

Step-by-Step Setup

1. Add .gitlab-ci.yml File

Place this file in the root of your GitLab repo:

<pre><code># File: .gitlab-ci.yml
# Assumption: a Node.js image; the runner's default image may not include npm
image: node:20

stages:
  - build
  - test

build_job:
  stage: build
  script:
    - npm install
    - npm run build

test_job:
  stage: test
  script:
    # Each job starts in a fresh environment, so install dependencies again
    - npm install
    - npm test</code></pre>

2. Push Code

GitLab automatically detects the .gitlab-ci.yml file and kicks off the pipeline.

3. View Pipeline

You can view real-time pipeline status under Build > Pipelines (CI/CD > Pipelines in older GitLab versions) in your GitLab project.

Which Should You Use?

| Use Case | Recommended Platform |
| --- | --- |
| Your repo is hosted on GitHub | GitHub Actions |
| Your repo is hosted on GitLab | GitLab CI/CD |
| You need free and easy pipelines | GitHub (for public repos) |
| You want advanced pipeline graphs | GitLab |
| You like YAML automation in pull requests | GitHub |
| You need tight integration with the GitLab ecosystem (issues, merge requests, etc.) | GitLab |

Tips for Both Platforms

- Use secrets: Store API keys and passwords in GitHub Actions secrets or GitLab CI/CD variables instead of committing them to the repo.
- Use caching: Speed up pipelines by caching node_modules, vendor, or build folders.
- Break into jobs: Use separate jobs for linting, building, testing, and deploying.
- Add notifications: Integrate with Slack, Discord, or email to receive status alerts.

Final Thoughts

Whether you use GitHub Actions or GitLab CI/CD, the key is this: automate your delivery pipeline early. It saves time, catches bugs sooner, and keeps your team moving fast.

Both platforms are powerful, customizable, and offer free usage tiers that suit solo developers, open-source maintainers, and teams of all sizes.
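To make the tips above more concrete on the GitLab side, here is a rough sketch of a .gitlab-ci.yml that splits work into separate jobs, caches npm downloads, uses `needs` to form a small DAG, and reads a secret from a CI/CD variable. The image tag, the `lint` script, the deploy.sh script, and the DEPLOY_TOKEN variable are all assumptions for illustration, not part of the original setup.

```yaml
# File: .gitlab-ci.yml (sketch; image, script names, and variable name are assumptions)
default:
  image: node:20
  # Cache npm's download directory, keyed on the lockfile so it refreshes
  # whenever dependencies change
  cache:
    key:
      files:
        - package-lock.json
    paths:
      - .npm/
  # Runs before the script of every job
  before_script:
    - npm ci --cache .npm --prefer-offline

stages:
  - lint
  - test
  - deploy

lint_job:
  stage: lint
  script:
    - npm run lint   # assumes a "lint" script in package.json

test_job:
  stage: test
  needs: []          # DAG: start right away instead of waiting for the lint stage
  script:
    - npm test

deploy_job:
  stage: deploy
  needs: [lint_job, test_job]
  rules:
    # Only deploy from the main branch
    - if: $CI_COMMIT_BRANCH == "main"
  script:
    # DEPLOY_TOKEN is a hypothetical masked variable set under
    # Settings > CI/CD > Variables; deploy.sh is a hypothetical script
    # that reads it from the environment
    - ./deploy.sh
```

Because test_job declares an empty `needs` list, it runs in parallel with lint_job, while deploy_job waits for both to succeed.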
